For more than a decade, marketers have theorized about how to be more strategic in attracting and persuading the right audiences. Through these postulations, we were brought persona-based marketing, celebrity endorsements, and viral video schemes. As a marketer myself, I can appreciate these contributions to the discipline of marketing and advertising. I can also attest to the ineffectiveness of these (or any other) tactics when the overall strategy is not optimized based on real-world customer data. There is not a single marketing channel in the world that possesses the unicorn-like capacity to increase your sales without a sound optimization strategy in place.

Enter A/B Testing

Part science, part art, A/B testing helps marketers focus their efforts and advertising dollars on the right channels, with the right message. Think of it like the nirvana of marketing. When you understand your audience’s beliefs and desires, everything else flows naturally. If mastering the concept of A/B testing seems like a daunting task, fear not! A/B testing is just a form of hypothesis testing (art) under a controlled setting (science).

Why A/B Test?

A/B testing is one of the few ways to gather objective data about how your audience actually behaves. Surveys, for example, introduce artificial emotional filters. Focus groups, while a good source of qualitative insight, do not scale as a way of gathering customer feedback. The reality is that any form of data collection that relies on human interpretation is subject to bias and is simply not as reliable as empirically gathered data. A/B testing offers an attractive promise to marketers in that, when executed correctly, it leaves very little room for bias.

The Basics of A/B Testing

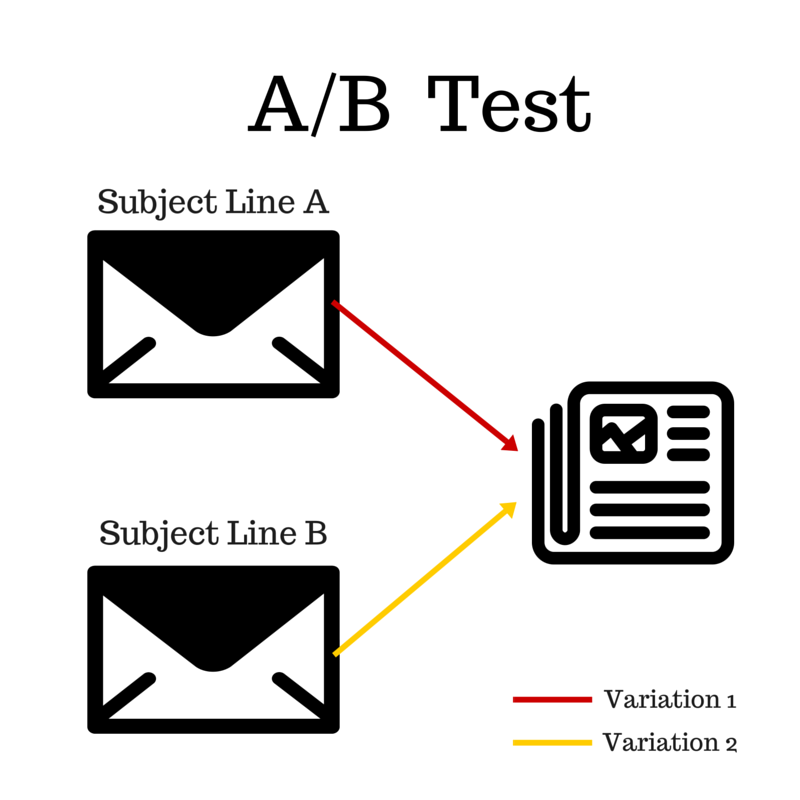

The goal of A/B testing is to identify how a change to a web page, advertisement, or email increases or decreases the likelihood of an outcome. For example, let’s say an ice cream shop owner has a database of 2,000 customer email addresses. The marketing-savvy owner would like to test his hypothesis that customers are more likely to open an email that promises a discount than one that introduces this month’s new flavor. A simple A/B test will answer his question. In this example, he should send 1,000 emails with a subject line offering a discount and the other 1,000 with a subject line announcing the new flavor. The subject line with the higher open rate would be deemed the winner, supporting or contradicting his hypothesis.

Alternatively, the ice cream shop owner might want to run a mini test before sending the email to his entire database of 2,000 people. He might, for example, send subject line version A to 150 people and subject line version B to a different group of 150 people. Based on the results of his mini test, he would send the winning version to the remaining 1,700 names in his database.
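The mini test can be sketched in a few lines of Python. This is only an illustration under some assumptions: the groups are picked at random (the example doesn't say how the 150 people are chosen, but random selection keeps the two groups comparable), and the function name `split_for_test` and the `example.com` addresses are hypothetical placeholders.

```python
import random


def split_for_test(addresses, test_size_per_group, seed=None):
    """Randomly pick two equal test groups and return (group_a, group_b, remainder)."""
    if 2 * test_size_per_group > len(addresses):
        raise ValueError("not enough addresses for two test groups")
    rng = random.Random(seed)
    shuffled = list(addresses)  # copy so the original list is left untouched
    rng.shuffle(shuffled)
    group_a = shuffled[:test_size_per_group]
    group_b = shuffled[test_size_per_group:2 * test_size_per_group]
    remainder = shuffled[2 * test_size_per_group:]
    return group_a, group_b, remainder


def open_rate(opens, sent):
    """Share of sent emails that were opened."""
    return opens / sent if sent else 0.0


# Hypothetical database of 2,000 customer addresses.
customers = [f"customer{i}@example.com" for i in range(2000)]

group_a, group_b, remainder = split_for_test(customers, 150, seed=42)
print(len(group_a), len(group_b), len(remainder))  # 150 150 1700
```

Once the opens are counted, `open_rate` gives the figure to compare for each group, and the winning subject line goes to the 1,700 addresses in `remainder`.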

Multivariate Testing

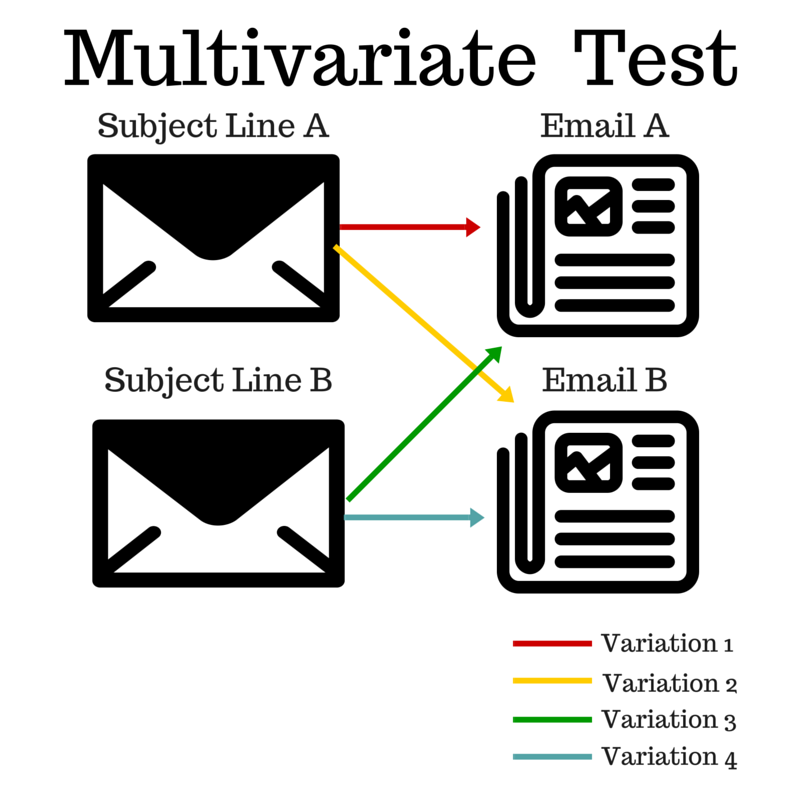

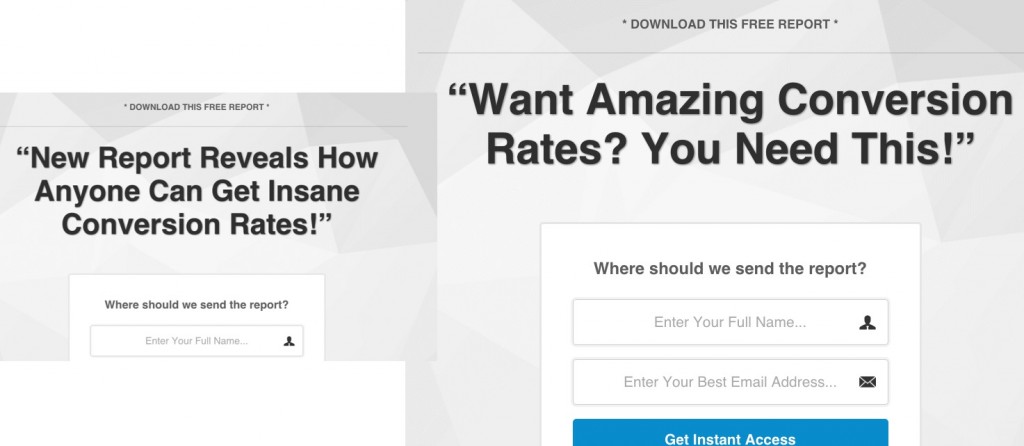

The example described above is a simplistic version of A/B testing. Version A is pitted against version B. Winner takes all. Advanced digital marketers sometimes run complex A/B tests, testing multiple elements at one time. This is called a multivariate test. Multivariate testing is similar to A/B testing in that it is a form of hypothesis testing. The difference is that in an A/B test, the marketer changes only one element, such as an email subject line, and in a multivariate test, the marketer changes multiple elements, such as an email subject line and the image within the header of the email. The image below illustrates the difference between an A/B test and a multivariate test.

This is an important distinction, and one that is not widely understood. When running a multivariate test, it is critical that each element is tested independently of the others. Testing each component separately allows marketers to pinpoint the element that caused the greatest lift in performance. How do you do this? Let’s return to our previous example. The ice cream shop owner has decided he wants to test two email subject lines and two email images. In the chart above, these are labeled “Email A” and “Email B”. Notice how this calls for four variations instead of only two. Here’s another way of charting these test cells:

| Subject Line | Email Version | Variation |
| --- | --- | --- |
| Subject line version A | Email version A | 1 |
| Subject line version A | Email version B | 2 |
| Subject line version B | Email version A | 3 |
| Subject line version B | Email version B | 4 |

By combining his test elements and testing every possible variation, the ice cream shop owner can quickly draw conclusions about which element was the most persuasive to his customers. He can use that data to make decisions about future marketing messages, ensuring his marketing efforts are fully optimized based on real-world data.
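If you'd rather not chart the test cells by hand, the same four variations can be generated programmatically. Here's a minimal sketch using Python's standard `itertools.product`; the helper name `build_test_cells` is just an illustrative choice, not part of any testing tool.

```python
from itertools import product

subject_lines = ["Subject line version A", "Subject line version B"]
email_versions = ["Email version A", "Email version B"]


def build_test_cells(*elements):
    """Return every combination of the given options, numbered from 1."""
    return list(enumerate(product(*elements), start=1))


cells = build_test_cells(subject_lines, email_versions)
for number, (subject, email) in cells:
    print(number, subject, email)
# 1 Subject line version A Email version A
# 2 Subject line version A Email version B
# 3 Subject line version B Email version A
# 4 Subject line version B Email version B
```

Adding a third element with two options would double the count to eight cells, which is worth keeping in mind when your list is small.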

Things to Test to Optimize Your Marketing

We’ve spent a lot of time talking about subject line and email optimization. Now, let’s review some other areas of your marketing mix that can easily be optimized through A/B testing or multivariate testing. The following is a list of ideas:

-

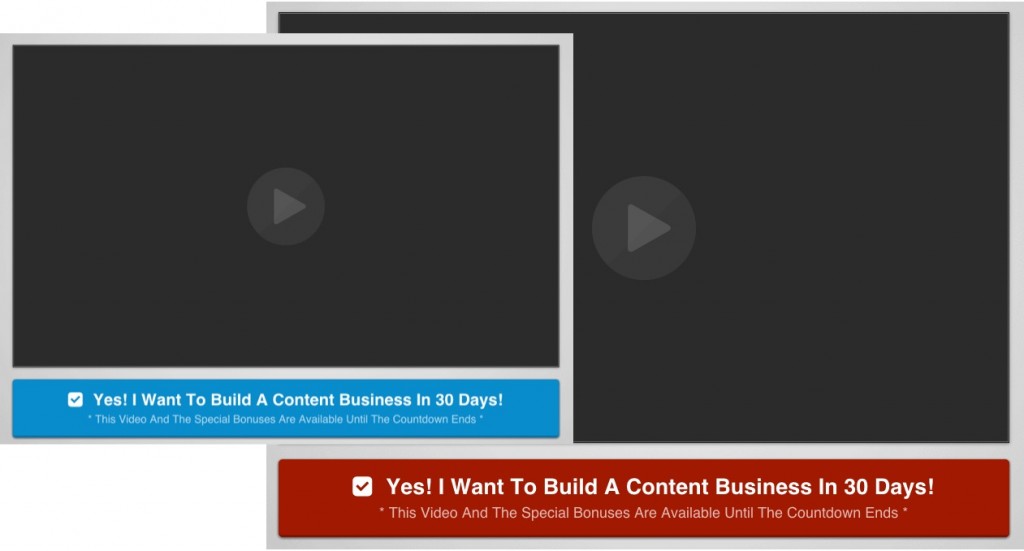

The button color on your web site

-

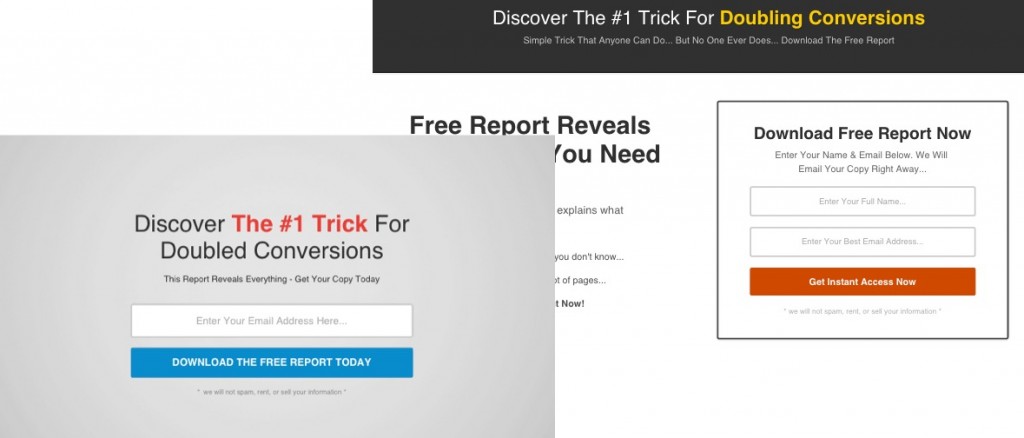

Use of white space on your landing pages

-

Headlines on the sub pages of your website

-

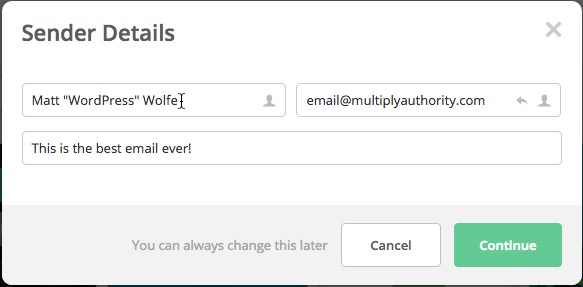

The “from name” displayed on your marketing emails

-

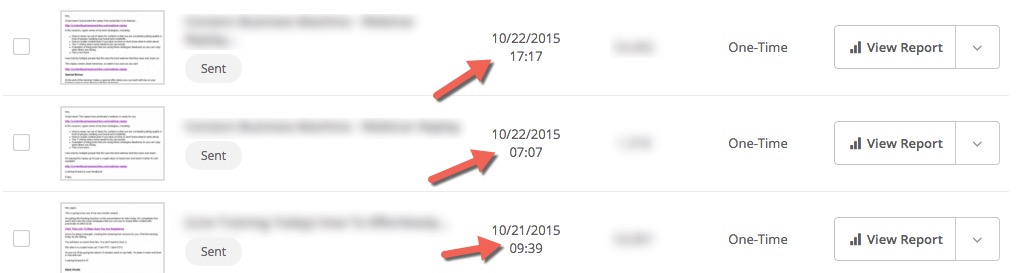

The time of day/evening your emails are sent

-

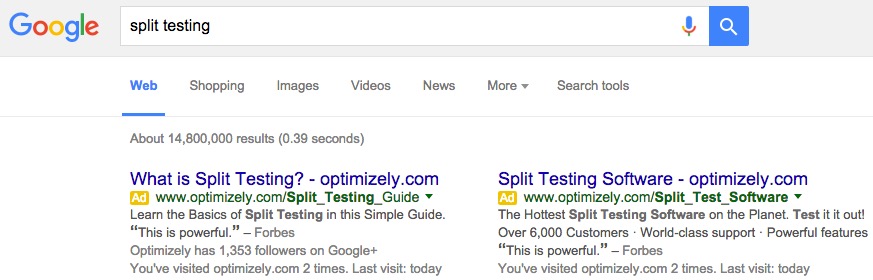

The copy on your search engine advertisements

-

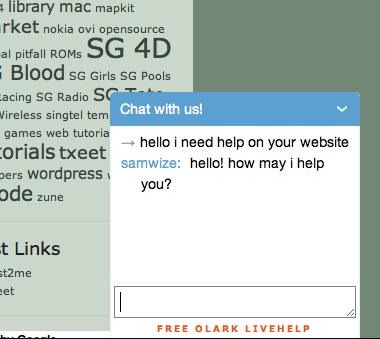

Inclusion of a “chat with us” feature on your website

-

The presence of a free sample or trial

-

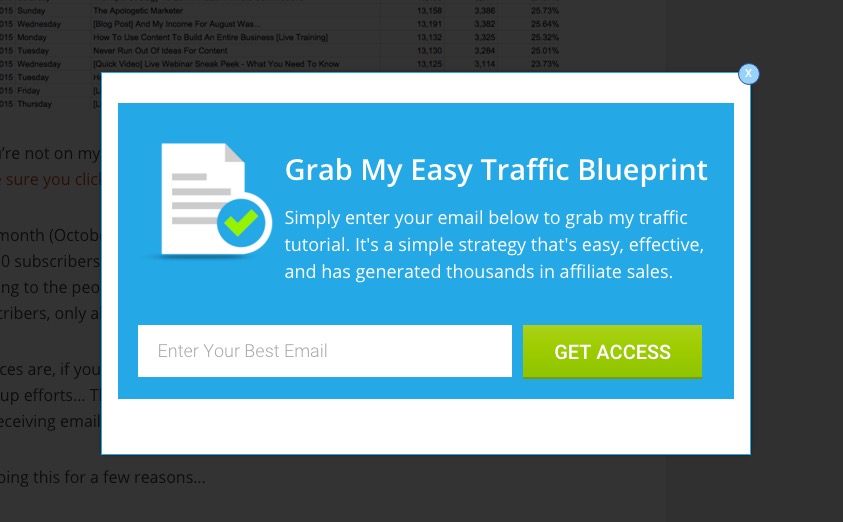

A pop-up window prompting users to join your newsletter

When executing these tests, remember to test only one element at a time. Or, if you decide to test multiple elements, always test each element independently. In other words, every possible variation must be tested so that your results are reliable. Some software platforms make this process easy, including Visual Website Optimizer, Optimizely, and Mixpanel.

Note from the Editor: If you use tools like Clickfunnels, LeadPages, or Unbounce to build your landing pages, they have built-in split testing.

Calculating Confident Results

In the world of conversion rate optimization (CRO), there is a concept known as statistical significance. Sometimes referred to as “confidence scoring,” statistical significance describes how unlikely it is that the difference you observed between two versions is down to chance alone. Scoring usually comes into play in the form of a percentage. For example, if your result is scored as 95% confident, that means a difference this large would show up less than 5% of the time if the two versions actually performed the same. It is not a guarantee that 95 out of 100 repeat tests would produce the same winner. As a rule of thumb, marketers should always strive for at least a 90% confidence score before making decisions based on a given set of data. You don’t need to be a statistician to understand confidence scoring. All you need is a reliable online calculator and a decent sample size. Fortunately, I’ve taken the liberty of finding the calculator for you here. You’re on your own for the population sample (more on that in a future post).
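For the curious, here's a rough sketch of the kind of math a calculator like this might run. It assumes a two-sided two-proportion z-test and reports the score as one minus the p-value, which is one common convention; the calculator you use may work differently. The function name `confidence_score` and the open counts in the example are made up for illustration.

```python
from math import erf, sqrt


def confidence_score(opens_a, sent_a, opens_b, sent_b):
    """Two-sided two-proportion z-test, returned as a score between 0 and 1."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate, assuming both versions really perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 0.0  # no variation in the data, so nothing to conclude
    z = abs(rate_a - rate_b) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(z / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return 1 - p_value


# Hypothetical results: 250 opens out of 1,000 for A, 200 out of 1,000 for B.
score = confidence_score(250, 1000, 200, 1000)
print(f"{score:.1%}")
```

The normal approximation behind this test gets shaky with small samples, which is one more reason the sample size matters.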

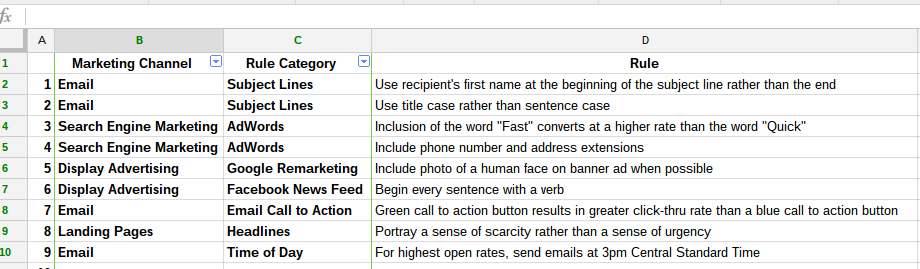

Keep Track of Your Test Results

This might seem obvious, but it’s worth mentioning because so many marketers get it wrong. Once you test an element of your marketing mix, and you identify confident results, keep track of your findings in a format that is easily sortable. Personally, I rely on a simple spreadsheet.
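If a spreadsheet feels too manual, the same log can be kept as a CSV file that any spreadsheet program will open and sort. The sketch below is only one possible layout: the column names and the two sample rows are hypothetical placeholders, not real results.

```python
import csv
import io

# Hypothetical columns; adjust to whatever you actually track.
FIELDS = ["date", "element_tested", "version_a", "version_b", "winner", "confidence"]


def write_results(rows, out_file):
    """Write test results as CSV, highest confidence first."""
    writer = csv.DictWriter(out_file, fieldnames=FIELDS)
    writer.writeheader()
    for row in sorted(rows, key=lambda r: r["confidence"], reverse=True):
        writer.writerow(row)


# Made-up example entries.
results = [
    {"date": "2024-02-03", "element_tested": "button color",
     "version_a": "green", "version_b": "orange", "winner": "B", "confidence": 0.91},
    {"date": "2024-01-10", "element_tested": "subject line",
     "version_a": "discount", "version_b": "new flavor", "winner": "A", "confidence": 0.96},
]

buffer = io.StringIO()  # swap for open("test_log.csv", "w", newline="") to save a file
write_results(results, buffer)
log_text = buffer.getvalue()
print(log_text)
```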

Marketing May Never Be The Same

Running experiments and making decisions based on data is about as old school as it gets. It’s the scale and the speed at which data is available to marketers that is game-changing. With appropriate use of online analytics tools, and a willingness to look past one’s personal biases, marketers can transform organizations and drive unprecedented growth.

Note from the editor: This post will become a “go to” resource on split testing. If you have any questions about A/B testing, or if you want other demonstrations or screenshots, let us know in the comments below. Like our other posts, this will be an ever-evolving resource for you to check back on.